Current Computer Science Research

Question Generation and Question-answering Systems

Learning research indicates that students retain and apply knowledge better when they recall and practice what they have learned than when they reread textbooks multiple times. This approach is referred to as retrieval practice. Supporting retrieval practice requires a large question bank; better still is a mechanism for automatically generating an unlimited number of questions.

We are developing several algorithms for automatically generating questions, along with a general framework for automatic question generation and answering and its associated algorithms. We expect the framework to be versatile enough to apply across several different domains. We are also working toward identifying and validating patterns for question generation and answering.

A related question generation/answering issue is answer validation. We explain answer validation in the context of the Structured Query Language (SQL), the ISO/ANSI standard for querying relational databases. The declarative features of SQL enable even novices to write database queries with minimal effort. However, users often erroneously conclude that a SQL query is correct simply because it compiles, executes, and fetches data. In the Big Data context, manual verification and validation of SQL queries is impractical, yet validating SQL queries is critical to ensuring that correct data is used for decision-making.
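The following Python sketch, using the standard-library `sqlite3` module, illustrates the pitfall. The schema (`customer`, `orders`, `phone`), the data, and the helper `results_match` are hypothetical. The candidate query runs without error and returns plausible totals, but an unnecessary join with `phone` repeats the orders of any customer who has two phone numbers. Comparing a candidate's result against a reference query's result on a test database is one simple validation check, assumed here for illustration rather than taken from our framework.

```python
import sqlite3
from collections import Counter


def build_sample_db() -> sqlite3.Connection:
    """Create a tiny in-memory test database (hypothetical schema and data)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
        CREATE TABLE phone (customer_id INTEGER, number TEXT);
        INSERT INTO customer VALUES (1, 'Ada'), (2, 'Bo');
        INSERT INTO orders VALUES (10, 1, 100.0), (11, 1, 50.0), (12, 2, 70.0);
        INSERT INTO phone VALUES (1, '555-0100'), (1, '555-0101'), (2, '555-0200');
        """
    )
    return conn


def results_match(conn: sqlite3.Connection, candidate_sql: str, reference_sql: str) -> bool:
    """Compare two queries' results as multisets of rows, ignoring row order."""
    candidate = Counter(conn.execute(candidate_sql).fetchall())
    reference = Counter(conn.execute(reference_sql).fetchall())
    return candidate == reference


# Question: "What is the total order amount for each customer?"
REFERENCE_SQL = """
    SELECT c.name, SUM(o.amount) FROM customer c
    JOIN orders o ON o.customer_id = c.id
    GROUP BY c.name ORDER BY c.name
"""

# Compiles, executes, and fetches plausible data, but the extra join with
# phone repeats each of Ada's orders once per phone number (doubling her total).
CANDIDATE_SQL = """
    SELECT c.name, SUM(o.amount) FROM customer c
    JOIN orders o ON o.customer_id = c.id
    JOIN phone p ON p.customer_id = c.id
    GROUP BY c.name ORDER BY c.name
"""

if __name__ == "__main__":
    conn = build_sample_db()
    print(conn.execute(REFERENCE_SQL).fetchall())  # [('Ada', 150.0), ('Bo', 70.0)]
    print(conn.execute(CANDIDATE_SQL).fetchall())  # [('Ada', 300.0), ('Bo', 70.0)]
    print(results_match(conn, CANDIDATE_SQL, REFERENCE_SQL))  # False
```

Matching results on a single test database does not prove two queries equivalent, since they may still differ on other data. A check like this can reject a wrong answer with certainty but can only lend evidence to a correct one.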

Our current work in this area involves automatically generating database queries using a SQL ontology and generating a structured English narrative for a given SQL query.

Computational Analysis and Understanding of Natural Languages

Language is a miracle of human life. Linguistics is the science of language, and linguistics research is concerned with answering many questions about language. Computational linguistics, also known as Natural Language Processing (NLP), is the application of computing technologies to analyzing and understanding human languages. The focus of NLP has shifted from language analysis to language understanding. In particular, the rise of deep learning algorithms and their pervasive influence are culminating in innovative applications and information systems (e.g., spoken language interfaces and chatbots) that leverage natural language understanding. This field is poised to dominate over the next several years and is often dubbed the next big wave in computing.

Natural language analysis and understanding systems (both written and spoken) are entering a broad spectrum of applications and information systems ranging from washing machines to self-driving cars and travel information systems. The synergistic confluence of linguistics, statistics, and database technologies is the underlying driving force.

In recent years, there have been dramatic advances in analyzing and understanding natural languages using big data and machine learning techniques. However, much of the research in this area is confined to a handful of resource-rich languages such as English, Japanese, and Chinese. By contrast, according to Ethnologue (https://www.ethnologue.com/), there are 7097 known living languages. Languages share much in common yet are remarkably diverse, and this diversity poses practical and challenging research problems.

Our research in this area focuses on the written aspects of computationally resource-poor languages such as Telugu, an official language of two states in India. Telugu is one of the classical languages of India, with a vast literature, richness, and melody. Our current work centers on corpus creation to enable corpus linguistics research.

Natural Language Interfaces

The volume of human language data currently generated in digital form is massive. For example, about 16 years of video is uploaded to YouTube daily. Searching for a speaker in these videos is difficult, given the data volume and query latency requirements. With the ubiquity of hand-held computing devices such as tablets and smartphones, voice-based interfaces will emerge as the natural medium for interaction with databases and information systems.

Web and enterprise search engines index content produced in multiple languages, especially in multinational companies. It is not far-fetched for a search user to issue a query in one language and expect to retrieve relevant documents authored in several other languages, automatically translated into the language of the query.

In a related development, there are currently over 350 data management systems, and new ones are introduced routinely. They use different data models; some do not provide database transactions, and others do not use SQL as their query language. They go by various names, including NoSQL, NewSQL, and non-RDBMS. Organizations often employ several types of NoSQL systems. Given this diversity of query languages, a natural language interface to NoSQL systems would help information systems developers and end-users.

We are investigating natural language interfaces to NoSQL databases.

Data Quality Issues for Data Science

Risks associated with data quality in data-intensive systems have wide-ranging adverse implications. Many organizations still lack accurate, timely, and useful data for operational and strategic decision-making. Failures caused by poor data quality are not only expensive but also embarrassing. Published examples include incorrect tax payments, incorrect credit reports, and rebates due to incorrect product labeling. Data quality is a systemic problem across industries, including financial services, healthcare, transportation, manufacturing, and communications.

Most current Big Data research focuses on the proverbial low-hanging fruit: storing and processing high-volume and streaming data using high-performance computing ecosystems such as Hadoop and Spark. However, the biggest problems for data-intensive applications and data analytics are data quality assurance and data security. Gartner reports that poor data quality is the major factor impeding the implementation of advanced data-intensive information systems.

Although data quality issues have been studied for over two decades in the context of corporate data governance, enterprise data warehousing, and the Web, the resulting approaches are either inadequate or inappropriate for developing data-intensive information systems. In the era of Big Data, data quality problems are exacerbated by data heterogeneity; duplicate, inconsistent, and incomplete data; and the challenges of record linking and data integration.
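As a minimal illustration of three of the problems named above (incomplete, duplicate, and inconsistent data), the following Python sketch profiles a few hypothetical customer records merged from two vendors. The records, the `profile` helper, the required fields, and the normalization rule (trim and lowercase) are all assumptions for illustration. Exact matching on normalized values is a deliberate simplification; practical record linkage usually needs fuzzy or probabilistic matching.

```python
from collections import defaultdict

# Hypothetical customer records merged from two data vendors.
REQUIRED_FIELDS = ("id", "name", "zip")
RECORDS = [
    {"id": "C1", "name": "Ada Lovelace", "zip": "48197"},
    {"id": "C1", "name": " ada lovelace", "zip": "48197"},  # duplicate after normalization
    {"id": "C2", "name": "Bo Chen", "zip": None},  # incomplete: missing zip
    {"id": "C3", "name": "Cy Diaz", "zip": "48104"},
    {"id": "C3", "name": "Cy Diaz", "zip": "48105"},  # inconsistent zip for the same id
]


def normalize(value):
    """Assumed normalization rule: trim whitespace and lowercase strings."""
    return value.strip().lower() if isinstance(value, str) else value


def is_missing(value) -> bool:
    return value is None or value == ""


def profile(records, key="id"):
    """Flag incomplete, duplicate, and inconsistent records by their key."""
    incomplete = [r[key] for r in records
                  if any(is_missing(r.get(f)) for f in REQUIRED_FIELDS)]

    # Duplicates: identical on every required field after normalization.
    seen, duplicates = set(), []
    for r in records:
        fingerprint = tuple(normalize(r.get(f)) for f in REQUIRED_FIELDS)
        if fingerprint in seen:
            duplicates.append(r[key])
        seen.add(fingerprint)

    # Inconsistent: the same key carries conflicting non-missing values for a field.
    values = defaultdict(lambda: defaultdict(set))
    for r in records:
        for f in REQUIRED_FIELDS:
            if not is_missing(r.get(f)):
                values[r[key]][f].add(normalize(r[f]))
    inconsistent = sorted(
        (rid, f)
        for rid, fields in values.items()
        for f, distinct in fields.items()
        if len(distinct) > 1
    )
    return {"incomplete": incomplete, "duplicates": duplicates,
            "inconsistent": inconsistent}


if __name__ == "__main__":
    print(profile(RECORDS))
    # {'incomplete': ['C2'], 'duplicates': ['C1'], 'inconsistent': [('C3', 'zip')]}
```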

Our current research addresses some of the above issues. The proposed solutions include integrating disparate data types from multiple data vendors, defining and assessing data quality in machine-generated unstructured data, and developing a data quality-centric application framework for extracting value from Big Data for machine learning-driven systems.

Personalized Learning

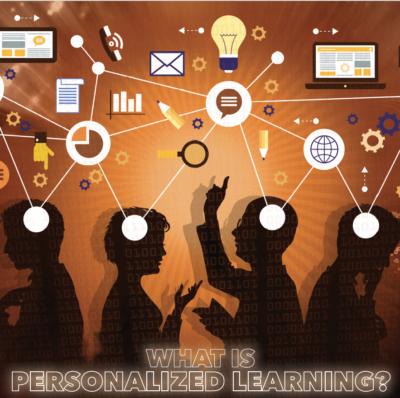

The National Academy of Engineering of the National Academies has identified advancing personalized learning as one of its fourteen Grand Challenges for Engineering. Personalization is a means to address diversity in student learning through inclusive and innovative pedagogy. It is also a means for just-in-time learning in both formal and informal environments. Beyond creating content for teaching and learning, personalized learning poses challenges for automated question generation, interactive and incremental scaffolding, and assessment.

Facets of our research in this area include structuring and authoring content, automated ontology construction, user modeling, algorithms for automated question generation and answering, and contextualized scaffolding. For example, content creation requires authoring topics at a fine-grained level. A topic is the smallest standalone unit of content and is devoid of context. This freedom from context enables weaving teaching and learning content for different audiences and purposes. Each topic features inclusive and innovative pedagogy; pre-, post-, and practice tests; and a chatbot for answering routine questions.

We are also developing an Interactive System for Personalized Learning (ISPeL). ISPeL is an analytics-driven web application for the delivery of personalized learning. ISPeL brings together our investigations in ontology construction, topic modeling, user modeling, question generation/answering, and learning research into a practical software system.

ISPeL achieves personalization via a Directed Acyclic Graph (DAG). Topics are the vertices of the DAG, and prerequisite dependencies between topics are its edges. ISPeL enables learners to traverse the DAG in any order, constrained only by topic prerequisites.
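Because the prerequisites form a DAG, deciding which topics a learner may open next reduces to a simple set check. The Python sketch below illustrates this under stated assumptions: the topic names and the helpers `check_acyclic` and `available_topics` are hypothetical and are not ISPeL's code. It uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+) to confirm that the prerequisite graph has no cycles.

```python
from graphlib import CycleError, TopologicalSorter  # standard library, Python 3.9+

# Hypothetical topics: each topic maps to the set of topics that must be
# completed before it (its incoming edges in the prerequisite DAG).
PREREQS: dict[str, set[str]] = {
    "relational model": set(),
    "sql basics": {"relational model"},
    "joins": {"sql basics"},
    "aggregation": {"sql basics"},
    "subqueries": {"joins", "aggregation"},
}


def check_acyclic(prereqs: dict[str, set[str]]) -> list[str]:
    """Return one valid study order; raises graphlib.CycleError if not a DAG.

    TopologicalSorter expects a mapping from each node to its predecessors,
    which is exactly topic -> prerequisites.
    """
    return list(TopologicalSorter(prereqs).static_order())


def available_topics(prereqs: dict[str, set[str]], completed: set[str]) -> set[str]:
    """Topics a learner may open next: not yet completed, all prerequisites met."""
    return {
        topic
        for topic, required in prereqs.items()
        if topic not in completed and required <= completed
    }


if __name__ == "__main__":
    check_acyclic(PREREQS)
    done = {"relational model", "sql basics"}
    # Both branches are open; the learner may take them in either order.
    print(sorted(available_topics(PREREQS, done)))  # ['aggregation', 'joins']
    try:
        check_acyclic({"a": {"b"}, "b": {"a"}})
    except CycleError:
        print("prerequisite cycle detected")
```

Any topological order of the DAG is a valid path through the material. Returning the whole set of currently available topics, rather than a single next topic, is what leaves learners free to choose their own order.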

ISPeL keeps track of individual learners’ progress and performance on pre- and post-tests associated with the topics. Learner progress and performance dashboards make it easy to assess learning. ISPeL is our newest research project.

Machine learning is the scientific study of algorithms and statistical models that computer systems use to progressively improve their performance on tasks such as detecting spam email, recognizing handwritten digits, and recognizing speech.

The goal of personalization is to address diversity in student learning. Personalization of learning is best thought of as a spectrum of approaches to teaching and learning. It encompasses a range of instructional approaches, learning experiences, and academic-support strategies.

Computational linguistics is the study of appropriate computational approaches to investigating linguistic questions. The terms computational linguistics (CL) and natural language processing (NLP) are often used interchangeably. CL/NLP provides unprecedented approaches to preserve, promote, and celebrate languages and linguistic diversity. NLP is the art of solving engineering problems that require analyzing and generating natural language in both written and spoken forms. NLP works on large quantities of existing data in machine-readable form.
